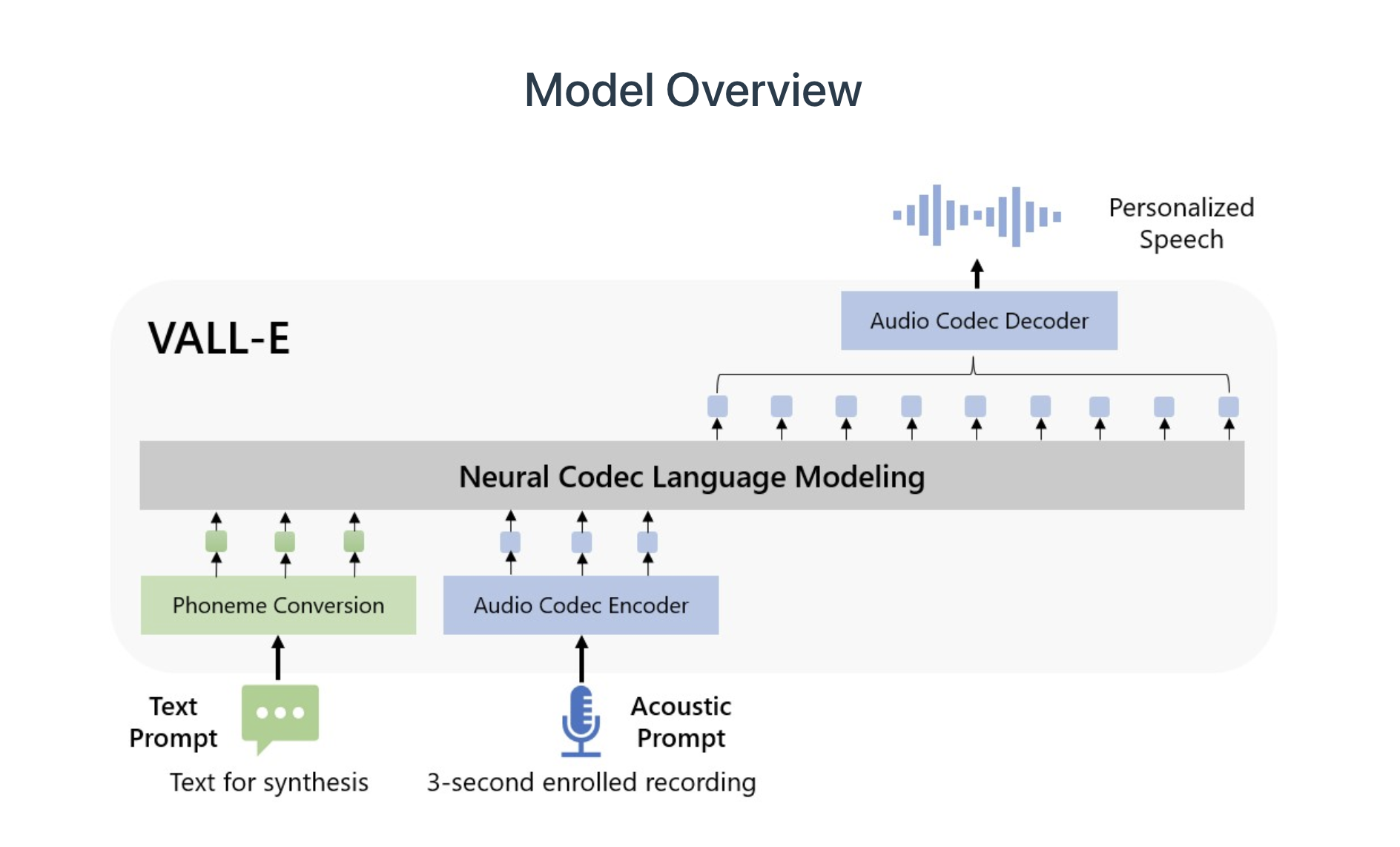

Microsoft's new AI model, VALL-E, can simulate an individual's voice from just a three-second audio sample. The model is trained on a dataset of public-domain audiobooks containing about 60,000 hours of recordings from over 7,000 speakers. The technology can produce high-quality text-to-speech output that even retains the emotional inflections of the speaker. However, it also poses potential risks if misused.

The potential benefits of VALL-E are clear. In industries such as film and animation, VALL-E could be used to replicate the voices of actors who are no longer available to dub their performances. This could save production companies significant amounts of money while also preserving the legacy of actors who have passed away. VALL-E also has the potential to make text-to-speech output sound more natural. For people with speech impediments, it could make communicating easier and more effective.

However, the technology also carries risks. For example, VALL-E could be used to impersonate someone or to fool voice recognition systems. It could also be used to spread misinformation through fake audio recordings that appear to come from credible sources. More broadly, deepfake voice technology will make it harder to verify the authenticity of audio content, which can be harmful in many domains.

To mitigate these risks, it's important for Microsoft and other companies working on similar technologies to have robust safeguards in place. These could include watermarking audio generated by VALL-E, which would make it possible to trace the origin of any misused recording. It is also important for these companies to proactively engage with potential users and stakeholders to ensure that the technology is used responsibly.

Some ideas around VALL-E

Possibilities:

- It could be used in education to create interactive and personalized content, giving students better engagement with the material.

- It could also be used in language learning, where people could practice conversations with a variety of accents and native-speaker voices.

- It could be used in customer service to create more personalized and natural interactions with callers by simulating the voices of specific employees or representatives.

- It could also be used in entertainment, such as creating audiobooks and podcasts with a variety of voices and accents for a more engaging experience.

- It could also be used in assistive technology for people with speech disorders, allowing them to communicate more effectively with synthesized speech that sounds like their own voice.

Risks:

- It could be used in fraudulent activities, such as impersonating a person's voice in financial scams.

- It could be used to create malicious or misleading audio content that could spread misinformation or fake news.

- It could be used in political propaganda and disinformation campaigns to create fake audio recordings that appear to come from credible sources.

- It could be used in cyberbullying and harassment by creating fake audio recordings of someone to mock, humiliate, or blackmail them.

- It could make it difficult to confirm the authenticity of audio content, and may also be used to create audio that could falsely implicate someone in a crime or other misconduct.

In conclusion, VALL-E has the potential to bring significant benefits to fields such as film and animation and speech therapy, and because it was trained on a large dataset, it achieves a good level of quality and accuracy in its results. However, it is crucial that the risks associated with its use are thoroughly considered and addressed. As technologies such as VALL-E develop, it is vital that we also address their ethical and societal impact, so that the technology is used responsibly and beneficially.